How Social Media Is Locking You in an Echo Chamber (And How to Get Out)

We live in the era of the For You Page. These curated, personalized feeds dictate what we see on apps like Instagram, TikTok, Pinterest, YouTube, Snapchat, and virtually every other social media and content platform out there. Your feed will never be quite the same as anyone else's, because every piece of content you see has been selected specifically to keep you hooked, interested, and engaged enough to stay on your app of choice.

How do social media platforms know what content to serve you? It’s a process performed by algorithms, which are essentially a set of rules and calculations that power your social media experience. We’ve covered these algorithms in the past, explaining the mysterious ways they work and how often they’re changed and tweaked as platforms like TikTok and Instagram adjust their strategies. The important thing to understand is that your entire experience of scrolling through these apps is controlled by content algorithms, which make subtle adjustments for each individual user based on their preferences, behaviour, demographics, and social connections.

Originally, content algorithms like these were touted as a way to personalize the experience to every user. And while it’s true that personalized content recommendations are definitely more engaging than, say, being forced to watch whatever is on cable TV (remember that?), it’s vital to remember that these algorithms are not just here to enhance your content-consumption experience.

In this article, we’re diving into the murky world of algorithmic echo chambers, examining the ways that programmed content preferences are deepening divides among us, radicalizing youth, and conditioning us to expect everything we read, watch, and hear to conform to our views of the world. We’ll look at what social media companies, corporations, and governments stand to gain from isolating us in these neat compartments of worldviews and perspectives, examine the possible biases and agendas present in so-called ‘blank slate’ algorithms, and offer some ideas on what you can do to break out of your own echo chamber.

Are You In an Echo Chamber?

We tend to think of ourselves as informed, unbiased, open-minded people. We have our opinions, but they are conclusions we drew ourselves based on the information we sought out. We search through the headlines, read between the lines, and draw out nuanced judgments about the things we learn. Or at least, that’s the way we tend to think of ourselves.

The fact is, if you are on social media, get your news through an aggregator, or simply like to scroll TikTok before bed, you are in an echo chamber. This isn't your fault, per se; it's simply a consequence of relying on algorithms to find content for you to read and watch.

Like so many other terms, the idea of an echo chamber has been highly politicized and weaponized over the past few years as political discourse has become more divisive. Right-wingers accuse liberals of being stuck in 'woke' echo chambers, oblivious to the supposed existential threats of immigration or trans rights. Left-wingers say the right are in chambers of their own, a climate of fear-based xenophobia and prejudice that allows them to slip further into conservative authoritarianism or worse.

Echo chambers don’t end at politics, either. A lack of politicization has the power to be just as isolating, feeding people who ‘aren’t into politics’ enough low-stakes memes and viral recipe videos to forget about the state of our world. On the other end, we have people who are so wrapped up in the world’s tragedies and atrocities that they believe the future of our species falls personally to them, preventing them from engaging in their real-life communities and putting them in a state of dread-induced paralysis.

Another common type of echo chamber is a sexual one: a feed dominated by lewd posts from OnlyFans models, softcore clips of couples in intimate situations, and misogynist ideology thinly veiled by relatable humour and irony. For young, impressionable men, this can lead to a hypersexual view of women and the world in general, which can easily segue into troubling subcultures with real-world implications, such as the online incel movement.

While no one's content algorithm is the same, one common thread runs through all of them: none of us are getting the full story about anything, at least not if we let our FYPs dictate the content we see. But why has the world of online entertainment and discourse taken this severe turn into insular pockets of unchallenged opinions accepted at face value? The answer is, of course, profit.

Echo Chambers: A Profit Machine

Platforms like Instagram make their money from advertisers, and that only happens if people are there to see the ads. The longer Instagram can keep you on the platform at a time, the more money it makes from your attention. Meaningful messages and genuine content are great for this, but they take time, effort, and that all-important resource, attention, both to make and to consume. But there's something that is far easier to make, spread, and digest: Fear.

Wherever you land on the political spectrum, our world is an uncertain and sometimes frightening place. You might fear for our economic future if you run a small business. You might be afraid of losing your rights as a queer person. You might worry about illegal immigrants importing crime to your neighbourhood. You might think the President of the United States is ushering in a new era in which hatred is mainstream opinion. You may think he's the only thing standing between the free world and total anarchy. In a way, it doesn't matter what you think, because your favourite social media app is all too happy to serve up the perfect blend of content to reinforce the opinions you already have, challenging them only to make you angry or afraid, never to change your mind.

Social media giants have learned that it’s not enough to stimulate you with one Reel or TikTok at a time. The real money lies in creating communities, selling people the idea of a group that thinks just like them—a group they can only access through social media.

If this is throwing anyone else back to high school English class, we'll say it with you: it's not all that dissimilar from the homogeneous, enforced conformity of George Orwell's dystopian classic 1984. (The term 'groupthink' often gets credited to Orwell, but it was actually coined by William H. Whyte in 1952, though it certainly sounds like it belongs alongside Orwell's 'doublethink'.) But unlike Orwell's story, this form of groupthink isn't directly imposed on us by an authoritarian government. Instead, it's something many of us gravitate towards voluntarily (though not always knowingly), becoming more entrenched in our beliefs, politics, and worldviews through the constant reinforcement of our like-minded online communities.

The Loss of Decision in Media

If you've ever found yourself struggling to find something to watch on Netflix or stuck in a music rut on Spotify, you know the fatigue that comes with having to make choices about the media you consume in a post-algorithm world. Consumption is easy when the content is chosen for you. The difference now is that it's chosen from a practically infinite pool of videos and other media, rather than you being stuck with whatever infomercial is running on late-night television.

As the range of content we can be served expands, our willingness to seek out things that challenge us shrinks. Over time, you feed the algorithm with every like, comment, and share. Even lingering on one video for a few seconds longer than usual signals higher interest to the algorithm, prompting it to keep sending you more of the same. This can lead to echo chambers of all kinds, whether that means sending you into the arms of the radical right, encouraging you to view women as inherently sexual beings, or promoting doom-soaked, performatively leftist views of a lost future.

We spend huge amounts of our time consuming this algorithmically curated content, and bit by bit, it can erode our critical thinking. The opinions we form in these digital rubber rooms, where the only thing resembling dissent or argument is bait content designed to frighten and upset us, begin to shape how we perceive the real world as well as the online one.

There once was a time when we had to make mindful, deliberate choices about every piece of media we consumed. We bought albums, went to the cinema, and rented DVDs. We read books that friends recommended to us. We hopped between far more separate websites in a day than many of us visit now, when a handful of apps supply almost everything. Today, every bit of your online experience is provided to you, each thirst trap or fearmongering, tinfoil-hat diatribe presented with equal weight and validity. It doesn't matter what you think about each video, only how long you spend on it and where on your screen you tap. Each piece of content feeds off the last, pulling you bit by bit into a digital niche that exists solely to capture as much of your time, emotion, and attention as possible.

How to Start Reclaiming Your Attention

Odds are, you don’t think of yourself as being in an echo chamber. We all consider ourselves free-thinkers, immune to the social engineering and propaganda that the ‘other side’ (whoever that is to you) is so clearly indoctrinated by. But zoom out, check yourself, and look at your algorithm objectively: Is this really presenting a nuanced, multifaceted view of the world? Or is it boiling it down to a simple but appealing emotional crutch, making it easy to confirm your biases and let your opinions go unchallenged?

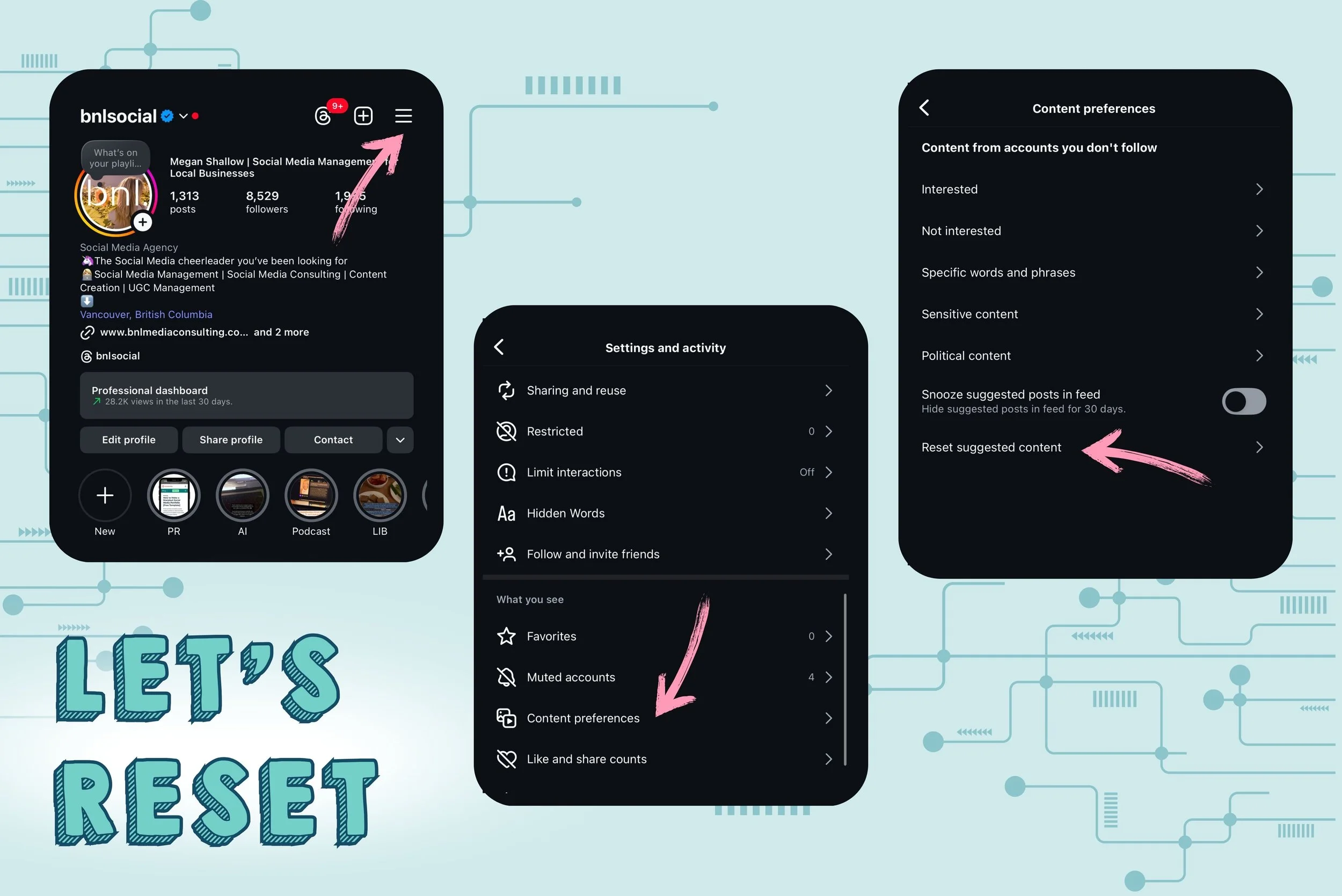

If you want to see just how fine-tuned your algorithm has become (and what a horrifying wasteland social media can really be without it), here's something to try. Head to 'Settings' in your Instagram app, then 'Content Preferences', then 'Reset Suggested Content'. This wipes the recommendation profile Instagram has built from your past activity, leaving it to work from more basic information, such as your age, gender, location, device, and the interests of your friends, until it relearns your habits. (Side note for anyone else looking to cut down on screen time: This is a great way to make Instagram Reels WAY less fun.)

Take a scroll through Reels or your FYP, and take note of what you find. Between the OnlyFans models, misogyny under the guise of relatable relationship humour, and AI-generated slop, what shows up on your feed?

Keep scrolling, and notice the ways the algorithm tests you as it works out the things that grab your attention. Here’s a woman in a bikini. Now a man eating a monkey. A scathing, ‘ironic’ meme about immigrants being deported. A call-to-action against fascism in America. A kid getting hurt on his bicycle. A joke about hitting your girlfriend. A cat in a bonnet. A Palestinian mother begging for help. An American mother preparing a meal. An ad for an unremovable bracelet to put on your partner. A shocking news headline without a source in sight. A beautiful woman blowing a kiss. A horrible accident. A joke. A tragedy. A joke. Again, and again, and again.

Bit by bit, innocuous 'joke' by joke, this algorithmic machine compiles its profile of you. Your mind, your heart, your attention. Not to show you the world or expand your horizons, but to shrink them. To neatly sort you into one box or another, then position a funnel of never-ending content directly overhead and bury you in it. It's not an insidious mind-control program designed to pacify the masses, at least not in the way some believe, but a mundane and predictable ploy to pry a few more seconds of your time out of your life and into their bottom line. So what's the solution?

Escaping Your Echo Chamber

There is a real desire to break free from these echo chambers. Services like Ground News have built their business around the growing niche of people looking to personally vet the news they read and throw off the yoke of rigid partisan thought. Some young people are getting back into books, a mindful, slow form of media that runs counter to today's lightning-fast content landscape and asks us to draw our own conclusions.

We see these attempts to return to a simpler, slower time everywhere, whether it's the ever-increasing importance of nostalgia across all media, the resurgence of the '90s VHS aesthetic, or the return of Y2K as the fashion movement of choice for Gen Z and Gen Alpha. These trends don't solve the problems of modernity, but they are responses to them: a sign that young people are feeling the pain and pressure of existing across these hyperconnected, yet deeply divided groups.

We can get out of our echo chambers. By recognizing the ways that algorithms manipulate us into a constant swing between smug self-righteousness and indignant outrage, we can start to see that politics, current events, and sexuality are not nearly as black and white as some would have you believe. Here are a few ideas on breaking out of these echo chambers, even if it’s just from time to time:

Take Back Your Choice: Choose more of the media and art you consume, picking out books or finding movies rather than letting algorithms serve you everything you see.

Art, Not Content: In general, look for ways to engage with art over content—find creators who make things that inspire you, and let that interest flourish.

Look for the Truth: When reading the news, go out of your way to read coverage of the same story from multiple sources. Different news outlets frame stories according to their own editorial slants, which rarely adds up to the full story. Question everything, especially when it tells you exactly what you want to hear.

Learn the Signs of Bad Info: Educate yourself about online misinformation tactics (such as deepfakes), ensuring you don’t get caught up in hoaxes and half-truths.

Stop and Think: Always do your own research through trustworthy sources before believing or acting on claims you read online, especially when it comes to geopolitics and current events. Ask yourself what the poster of this content has to gain from putting it out there.

Talk to People: Whenever possible, try to talk to people with different opinions from you (ideally in real life!), approaching the conversation with empathy and an open mind. You share a lot more in common with the people on the other side of the aisle than you may realize. Look for what unites you before picking apart the differences.

Be Willing to Change Your Mind: Ignorance is stubborn; wisdom is reasonable. No one knows everything, and there’s nothing wrong with changing your views when presented with new, compelling information.

Address Echo Chambers at the Source: You may not like it, but there's no better way to reduce your algorithm's power over you than to simply reduce its access. In other words? Try to get that screen time down, and spend more time in the real world! You'll soon start to see that, really, most people are good, capable of love, and just trying their best to make it in a tough world. And guess what? You don't have to agree on everything to get along.

Hope is not lost for intelligent discourse, productive arguments, and the possibility of changing someone else’s mind—or your own. But to make this possible, we have to confront the comfortable, golden shackles of our algorithms and make our own, private decisions to set them down.